

Our Marketing Analytics team give an insight into how we built a Marketing Mix Model in-house.

Click-based attribution has become less reliable as tracking partners restrict the data we can receive from channels. This has weakened our attribution model, which takes a last-touch approach. We therefore decided to develop a Marketing Mix Model (MMM) at Cleo, both as an alternative attribution method and to guide our monthly budget planning.

In this post, I'll share our experience in building an MMM in-house and what you need to know if you're planning to do the same.

MMM (Marketing Mix Model) is a statistical approach to modelling the outcomes of marketing investment. It helps us understand the incremental impact of our investment in each channel on the KPI that matters, also called the goal metric, target metric or target variable. This can be anything your company cares about, such as revenue or total new users.

There are several use cases for MMM:

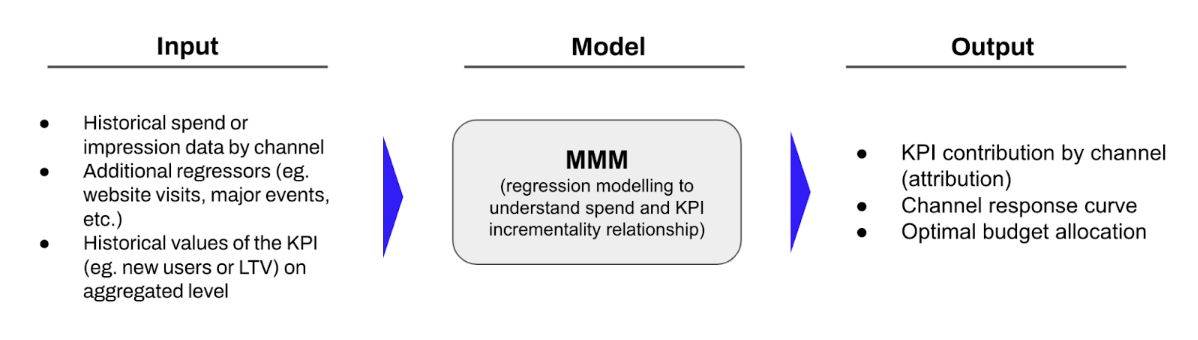

The figure below provides an overview of how MMM works:

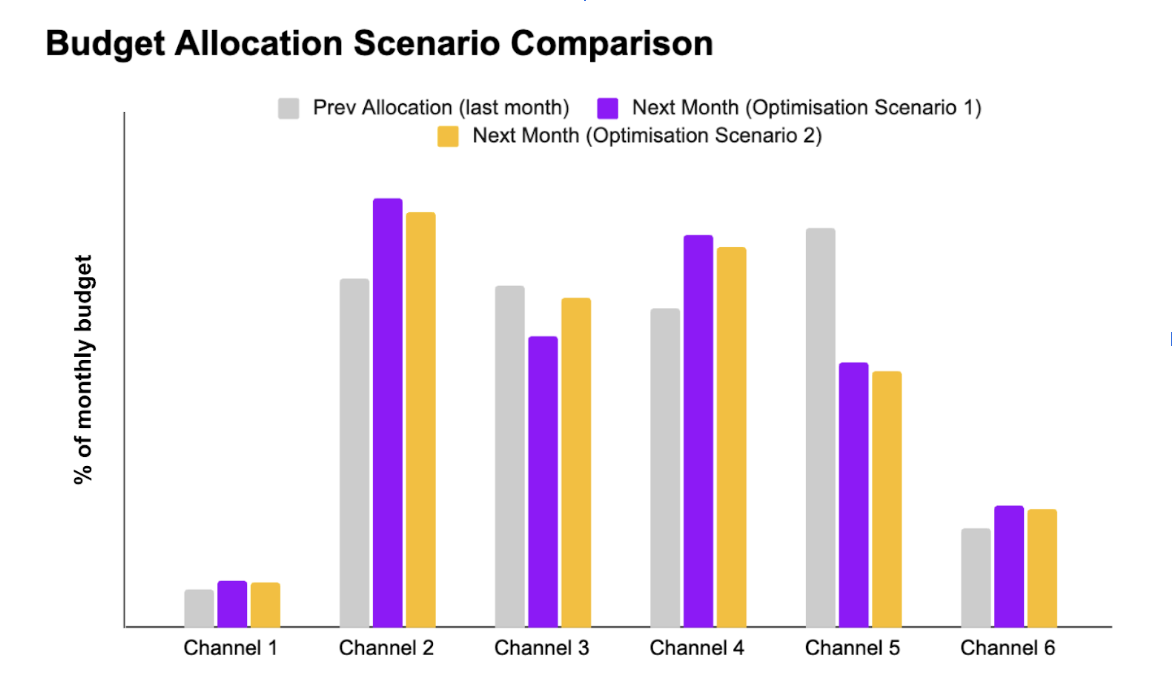
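To make the figure a little more concrete, here is a minimal sketch of the forward model behind many MMMs, assuming one common formulation: each channel's spend passes through a geometric adstock (carry-over) transform and a Hill saturation (diminishing returns) curve, and the KPI is a baseline plus a weighted sum of those transformed channels. The channel names and parameter values are hypothetical, and this is not LightweightMMM's code.

```python
from typing import Dict, List


def geometric_adstock(spend: List[float], decay: float) -> List[float]:
    """Carry-over: a_t = x_t + decay * a_{t-1}, so past spend keeps contributing."""
    adstocked: List[float] = []
    carry = 0.0
    for x in spend:
        carry = x + decay * carry
        adstocked.append(carry)
    return adstocked


def hill_saturation(x: float, half_saturation: float, slope: float) -> float:
    """Diminishing returns: 0 at x = 0, 0.5 at x = half_saturation, tending to 1."""
    if x <= 0:
        return 0.0
    return 1.0 / (1.0 + (half_saturation / x) ** slope)


def predict_kpi(
    spend_by_channel: Dict[str, List[float]],
    params: Dict[str, Dict[str, float]],
    baseline: float,
) -> List[float]:
    """KPI_t = baseline + sum over channels of beta * hill(adstock(spend))_t."""
    n_periods = len(next(iter(spend_by_channel.values())))
    kpi = [baseline] * n_periods
    for channel, spend in spend_by_channel.items():
        p = params[channel]
        for t, a in enumerate(geometric_adstock(spend, p["decay"])):
            kpi[t] += p["beta"] * hill_saturation(a, p["half_saturation"], p["slope"])
    return kpi


# Hypothetical channels and parameters, for illustration only
spend = {"paid_social": [100.0, 0.0, 0.0], "search": [50.0, 50.0, 50.0]}
params = {
    "paid_social": {"decay": 0.5, "half_saturation": 100.0, "slope": 1.0, "beta": 200.0},
    "search": {"decay": 0.0, "half_saturation": 50.0, "slope": 1.0, "beta": 100.0},
}
print(predict_kpi(spend, params, baseline=1000.0))
```

Note how the paid social spend in the first period still contributes in periods two and three through adstock. Fitting an MMM runs this in reverse: given observed spend and KPI, a Bayesian package such as LightweightMMM estimates the decay, saturation and weight parameters as posterior distributions.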

At Cleo, we use the MMM output as part of our monthly budget planning process. At the end of every month, we run MMM's budget optimisation feature to inform how we should allocate our budget across channels, as illustrated below. Once the month has closed, we also run MMM to understand the previous month's attribution and make sure we're heading in the right direction.

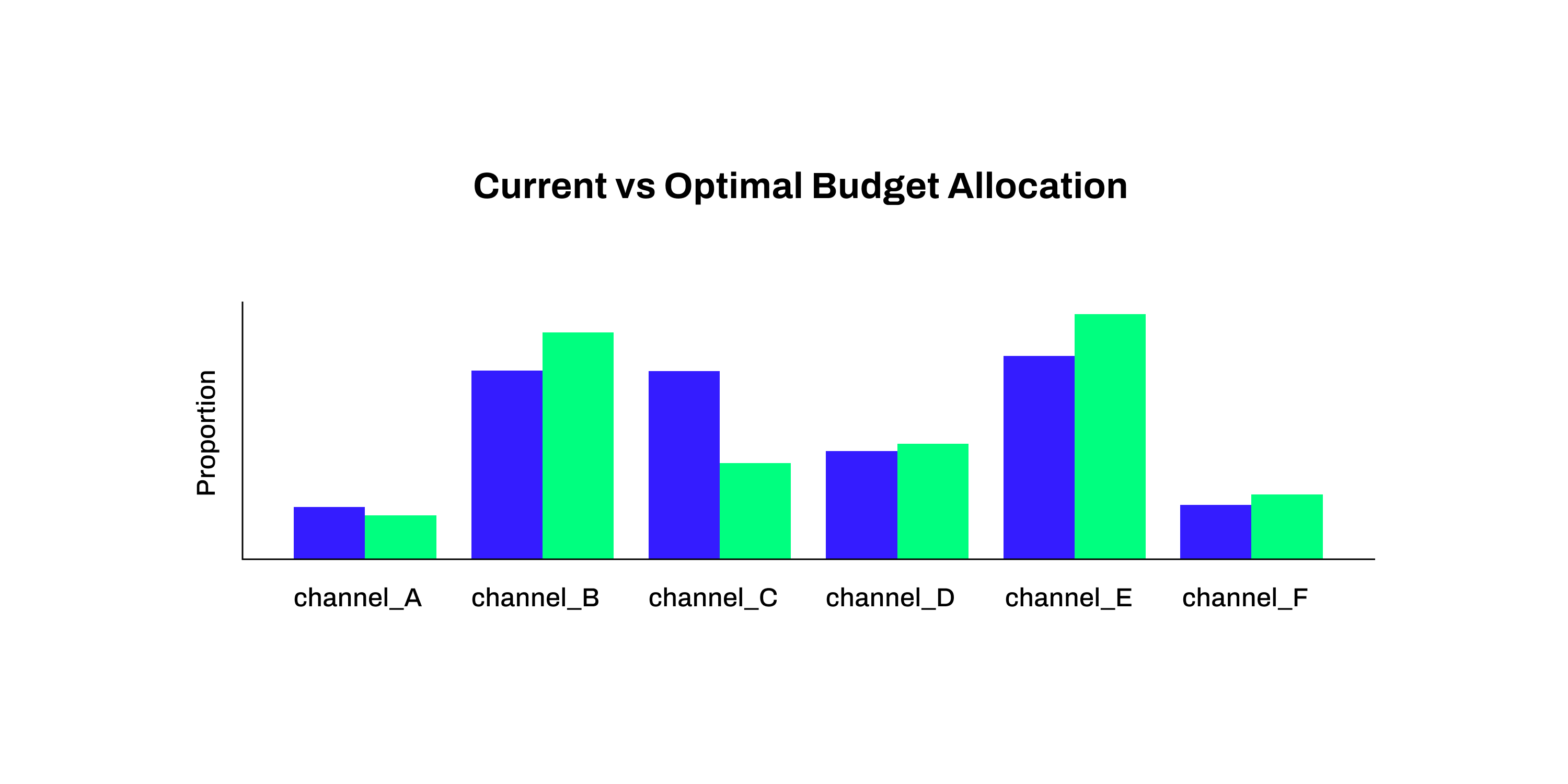

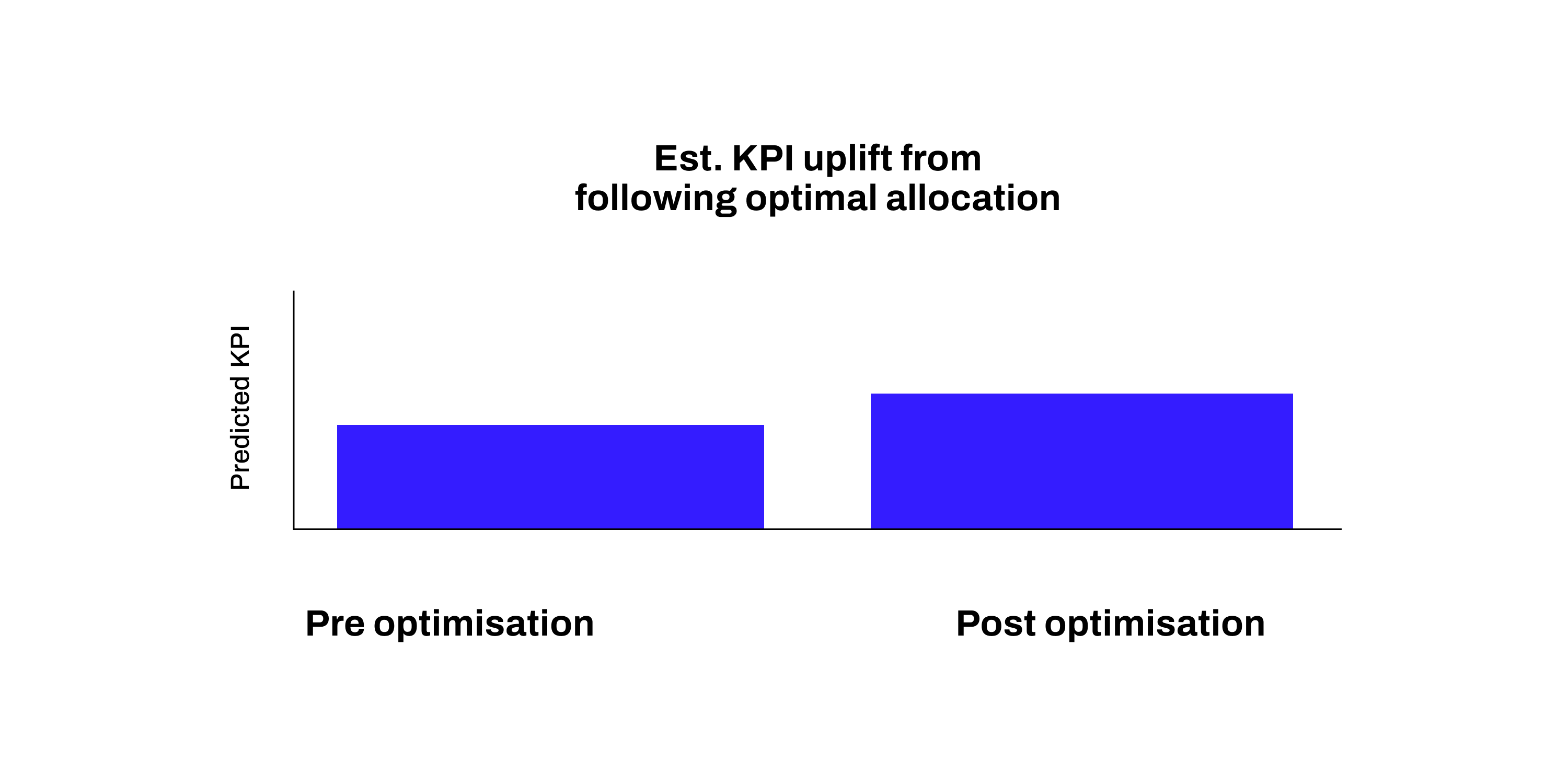
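The budget optimisation step can be pictured with the sketch below. It is not LightweightMMM's optimiser but a simpler stand-in, assuming each channel already has a concave response curve (diminishing returns) estimated by the model. It splits a fixed budget into small increments and gives each increment to the channel with the highest marginal KPI gain; for concave curves, this greedy rule is optimal among allocations made in multiples of `step`. The curves, channel names and budget are hypothetical.

```python
import math
from typing import Callable, Dict

ResponseCurve = Callable[[float], float]


def allocate_budget(
    curves: Dict[str, ResponseCurve], total_budget: float, step: float
) -> Dict[str, float]:
    """Greedily hand out `step`-sized increments to the channel with the largest marginal gain."""
    allocation = {channel: 0.0 for channel in curves}
    for _ in range(int(total_budget // step)):
        best = max(
            curves,
            key=lambda c: curves[c](allocation[c] + step) - curves[c](allocation[c]),
        )
        allocation[best] += step
    return allocation


# Hypothetical concave response curves: predicted new users as a function of spend
curves = {
    "paid_social": lambda s: 300.0 * (1 - math.exp(-s / 2000.0)),
    "search": lambda s: 150.0 * (1 - math.exp(-s / 500.0)),
}
plan = allocate_budget(curves, total_budget=5000.0, step=100.0)
print(plan)
```

Because the first increments in search buy more new users than the first increments in paid social, search is funded first, until its marginal return drops below that of paid social.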

You can see from the charts below how using MMM has changed our budget allocation per channel, and the KPI uplift we've seen as a result:

Generally, there are three options to implement MMM:

There’s no “best” option; it depends on your company's situation. Hiring an agency or buying an MMM product would be a straightforward solution for most startups. Still, you might want to consider the capacity and domain expertise of your data and marketing teams, the maturity of your data and reporting, your timeline, and your long-term marketing plan.

We eventually decided to build it in-house for several reasons:

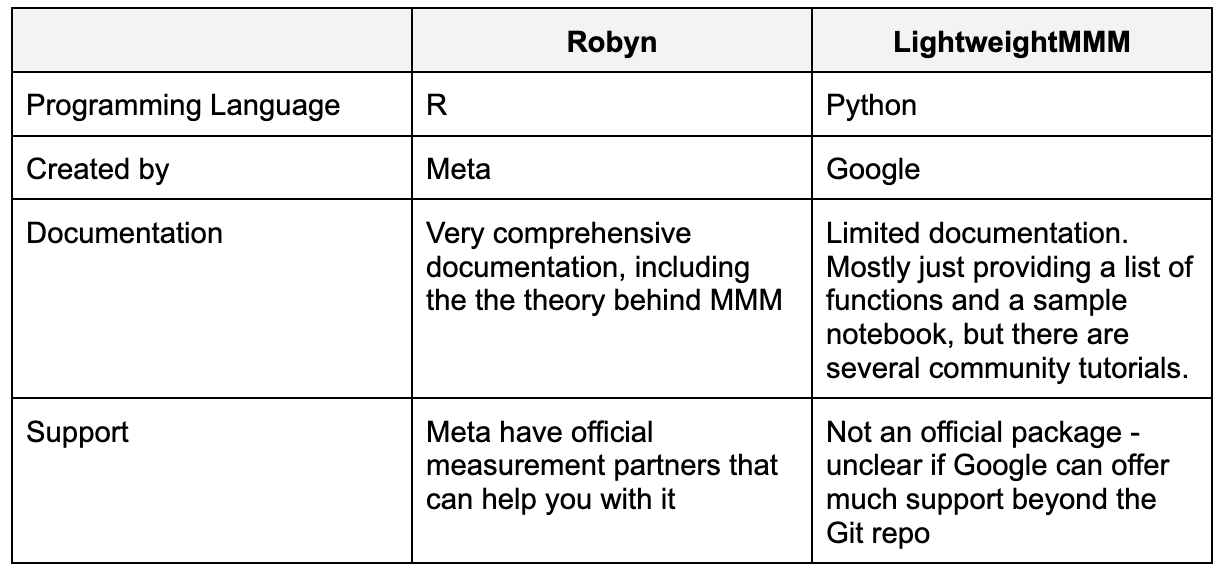

To develop our MMM, we looked into two open-source packages: Google's LightweightMMM (Python) and Meta's Robyn (R). Their features are fairly similar, but we went with LightweightMMM because more of our data team are Python experts. Here is some basic information you might want to consider if you need to choose between the two:

Building our MMM wasn't plain sailing, and we encountered several challenges that needed addressing. Here are four challenges you're likely to face if you build an MMM yourself, along with some tips on how we tackled them.

Any predictive model is only as good as the data you feed into it, and MMM is no exception. To ensure your model has a strong set of explanatory variables:

Performance marketers are very data-driven: when a channel performs well, they put more money into it. As a result, it's not uncommon for several channels' spend to rise and fall together. Because MMM compares movements in spend against changes in the KPI to estimate each channel's incrementality, this multicollinearity makes it hard for the model to tell which channel is actually driving the KPI.
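A quick way to spot multicollinearity before fitting is to check pairwise correlations between channels' spend series. The sketch below does this in plain Python; the 0.8 threshold, channel names and spend figures are hypothetical, not values from our model.

```python
import math
from itertools import combinations
from typing import Dict, List, Tuple


def pearson(x: List[float], y: List[float]) -> float:
    """Pearson correlation between two equal-length series."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        return 0.0  # undefined for a constant series; treated as uncorrelated here
    return cov / math.sqrt(var_x * var_y)


def collinear_pairs(
    spend: Dict[str, List[float]], threshold: float = 0.8
) -> List[Tuple[str, str, float]]:
    """Return channel pairs whose spend series have |correlation| >= threshold."""
    flagged = []
    for a, b in combinations(spend, 2):
        r = pearson(spend[a], spend[b])
        if abs(r) >= threshold:
            flagged.append((a, b, round(r, 3)))
    return flagged


# Hypothetical weekly spend: paid social and search were scaled up together
weekly_spend = {
    "paid_social": [100, 120, 150, 180, 210, 250],
    "search": [50, 61, 74, 90, 104, 126],
    "tv": [0, 500, 0, 500, 0, 500],
}
print(collinear_pairs(weekly_spend))
```

Only paid social and search are flagged here, since their spend moves almost in lockstep; the alternating TV schedule is only weakly correlated with either.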

Some ways we tackled this issue:

MMM doesn't work well for channels with many periods of zero spend. It relies on variation in each channel's spend or impression data to learn how that channel drives the KPI, so when spend rarely changes, the model has too few different spend levels to compare.
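A simple pre-fitting diagnostic is to measure, per channel, the share of periods with zero spend and how much spend varies (the coefficient of variation). A minimal sketch in plain Python; the 30% zero-spend cut-off and the example data are hypothetical.

```python
import statistics
from typing import Dict, List


def spend_diagnostics(spend: List[float]) -> Dict[str, float]:
    """Share of periods with zero spend, and coefficient of variation (stdev / mean)."""
    zero_share = sum(1 for s in spend if s == 0) / len(spend)
    mean = statistics.fmean(spend)
    cv = statistics.pstdev(spend) / mean if mean > 0 else 0.0
    return {"zero_share": zero_share, "cv": cv}


def flag_sparse_channels(
    spend_by_channel: Dict[str, List[float]], max_zero_share: float = 0.3
) -> List[str]:
    """Channels whose share of zero-spend periods exceeds the (hypothetical) cut-off."""
    return [
        channel
        for channel, spend in spend_by_channel.items()
        if spend_diagnostics(spend)["zero_share"] > max_zero_share
    ]


# Hypothetical weekly spend: podcasts ran a single burst
weekly_spend = {
    "search": [500.0, 520.0, 480.0, 510.0, 530.0, 490.0],
    "podcasts": [0.0, 0.0, 1200.0, 0.0, 0.0, 0.0],
}
print(flag_sparse_channels(weekly_spend))  # → ['podcasts']
```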

There are a couple of options to deal with this:

The tricky part with any attribution method is that you'll never know the truth… at least not until you run an incrementality test! You can evaluate model quality with metrics like MAPE (Mean Absolute Percentage Error, the average absolute percentage difference between the model's predictions and the actual values) or the r_hat statistic (which measures whether the MCMC chains of your Bayesian model have converged; you want it close to 1 and no greater than 1.1). But these metrics are not a substitute for the truth about how much to spend on each channel.

So, while MMM gives you the comfort of having your attribution and budget decisions backed by data and a model, you can never be 100% sure that its attribution is the truth or that its recommended budget allocation is optimal to the cent.
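For reference, here is a minimal plain-Python sketch of the two diagnostics mentioned above: MAPE, and the classic (non-split) Gelman-Rubin r_hat computed from several MCMC chains for a single parameter. MCMC libraries typically report a refined split-chain variant, so treat this as an illustration of the idea rather than a replacement for their output.

```python
import statistics
from typing import List, Sequence


def mape(actual: Sequence[float], predicted: Sequence[float]) -> float:
    """Mean absolute percentage error in percent; assumes no actual value is zero."""
    errors = [abs((a - p) / a) for a, p in zip(actual, predicted)]
    return 100.0 * statistics.fmean(errors)


def gelman_rubin_rhat(chains: List[List[float]]) -> float:
    """Classic potential scale reduction factor for m >= 2 chains of n draws each."""
    n = len(chains[0])
    chain_means = [statistics.fmean(c) for c in chains]
    within = statistics.fmean([statistics.variance(c) for c in chains])  # W
    between = n * statistics.variance(chain_means)  # B
    pooled = (n - 1) / n * within + between / n  # pooled posterior variance estimate
    return (pooled / within) ** 0.5


print(mape([100.0, 200.0], [110.0, 190.0]))
# Two chains stuck in different regions: r_hat far above 1.1, so not converged
print(gelman_rubin_rhat([[0.0, 1.0, 0.0, 1.0], [10.0, 11.0, 10.0, 11.0]]))
```

A low MAPE and an r_hat near 1 only tell you the model fits the history and the sampler has converged; neither says the attribution is causally right.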

We ran into these observations from the MMM we developed:

It’s not enough to just build an MMM; you have to run experiments and find a balance between relying on MMM and occasionally running tests. Although incrementality tests can sometimes be impractical, they're still the only way to calibrate and validate your MMM.
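As a rough illustration of using a test to check the model, the sketch below estimates the incremental KPI from a hypothetical geo test and compares it with what the MMM attributed to the same channel, regions and period. The ratio-based counterfactual (scaling the control regions by the pre-test ratio between test and control) is a deliberately simple assumption, and all names and numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class GeoTestResult:
    test_kpi: float     # KPI in test regions during the test (channel on or boosted)
    control_kpi: float  # KPI in holdout regions during the test
    test_pre: float     # KPI in test regions before the test
    control_pre: float  # KPI in holdout regions before the test


def incremental_kpi(r: GeoTestResult) -> float:
    """Observed test KPI minus a counterfactual from control, scaled by the pre-test ratio."""
    counterfactual = r.control_kpi * (r.test_pre / r.control_pre)
    return r.test_kpi - counterfactual


def calibration_ratio(measured_incremental: float, mmm_attributed: float) -> float:
    """Above 1: the MMM under-credits the channel; below 1: it over-credits it."""
    return measured_incremental / mmm_attributed


result = GeoTestResult(test_kpi=1300.0, control_kpi=1000.0, test_pre=1000.0, control_pre=1000.0)
lift = incremental_kpi(result)
print(lift, calibration_ratio(lift, mmm_attributed=400.0))
```

Here the test measures 300 incremental units against 400 attributed by the model, a ratio of 0.75, which would suggest the MMM over-credits this channel and its priors or inputs deserve a second look.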

If you're interested in learning more about incrementality tests, we've published a post about our geo-testing approach here.
